2 You As a Learning Agent

cite

quote

2.1 Cognitive self-experiment: Under construction

2.2 Mental models

2.3 A note on terminology

These definitions are not absolute. In AI, some terms have fixed, precise definitions, and others are used more loosely.

Feynman quote.

2.4 Why so many analogies?

2.5 Why so many stories?

2.6 Why triangles?

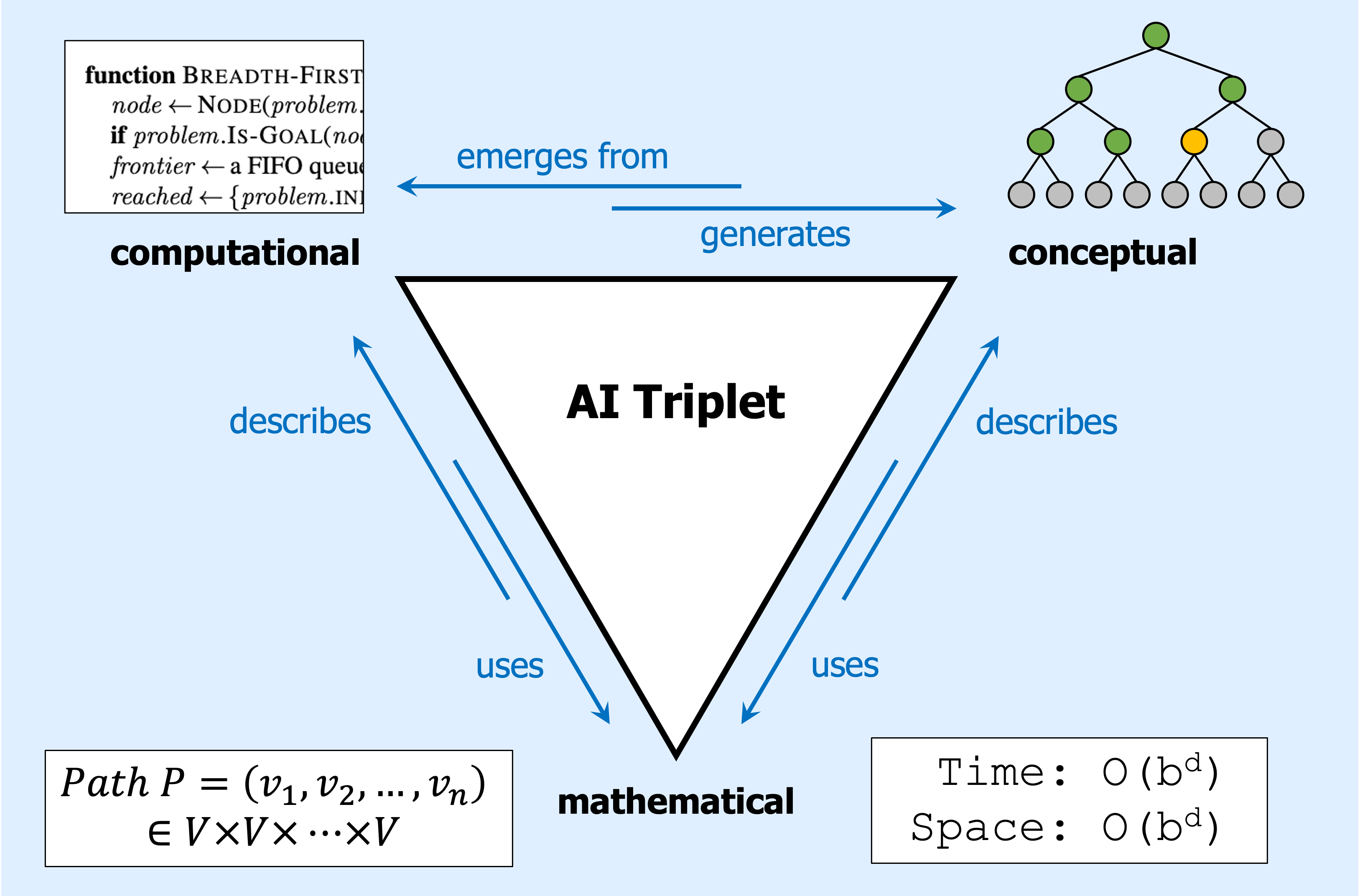

Kunda, M. (2021). The AI triplet: Computational, conceptual, and mathematical representations in AI education. https://arxiv.org/abs/2110.09290

The name of this book comes from an idea I recently had about the different kinds of knowledge that are necessary for understanding AI:

- Computational knowledge about code, how pieces of code run, what data structures are and how they behave, etc.

- Conceptual knowledge about abstract ideas like infinite search spaces; graphs, nodes, and edges; trees and branches; high-dimensional surfaces; infinite time horizons; etc.

- Mathematical knowledge about formal mathematical entities like functions, sets, variables, equations, etc.

These three kinds of knowledge form what I call the “AI triplet.” Expertise in AI often requires being able to think about the same concept at each of these three levels, both separately and in combination.

You can see an illustration of the AI triplet here:

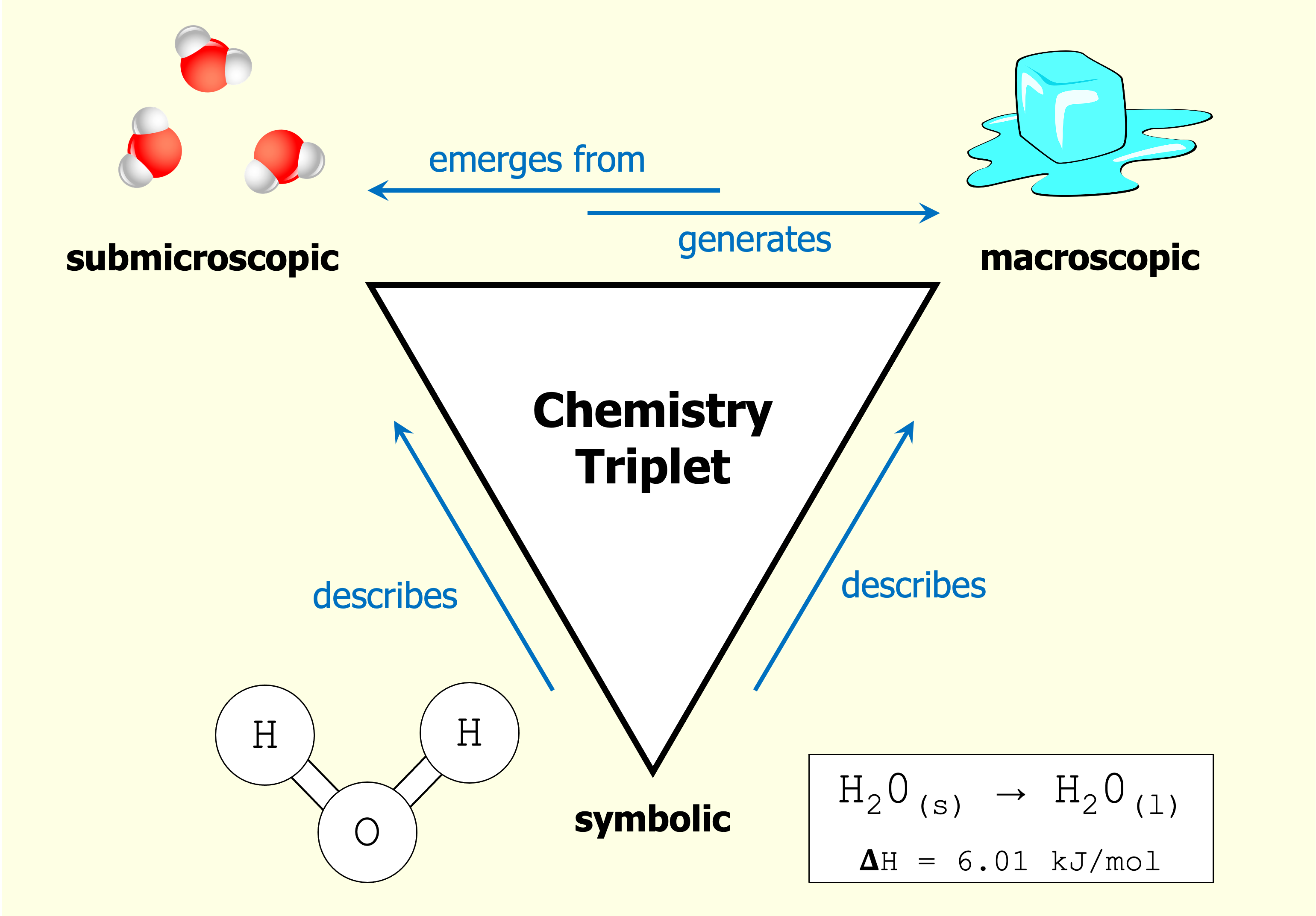

Johnstone, A. H. (1982). Macro- and micro-chemistry. School Science Review, 64, 377–379.

This idea was inspired by the “chemistry triplet,” a similar triad for chemistry that was originally proposed in 1982 and has been very influential in chemistry education.

In chemistry, there is a submicroscopic level that consists of particles, waves, and so on. Interactions at this level generate phenomena at the macroscopic level, which includes human-scale things like solids, liquids, and different substances. Then, there is a symbolic level that contains all of the human-created notation used to describe things at the other two levels.

Levels of knowledge in AI are both similar to and slightly different from those in chemistry. Just as things at the submicroscopic level in chemistry generate things at the macroscopic level, things at the computational level in AI generate things at the conceptual level.

In other words, pieces of code in the computational artifacts that we build are a bit like particles in a chemical system. They interact and undergo various behaviors and changes, often in complicated ways that we cannot predict from the starting state of the system. Eventually, just as particles interact to produce what we call a liquid, the pieces of code in AI systems interact to produce conceptual behaviors like searching through a space of possibilities. These conceptual behaviors are eventually combined to produce chess-playing systems, car-driving systems, image recognition systems, and so on—all of the AI capabilities that are comprehensible to us at human scales.

And, finally, just as notation in chemistry is a human-created construct that is useful for describing and modeling chemical systems, in AI, we use math as a formal construct for describing (or designing) things that are happening in our AI systems. Sometimes math shows up in the form of equations and calculations, and sometimes just in the style of formal notation that we use to describe AI problems or elements in an AI system.

Throughout this book, new topics and ideas will generally be introduced at the conceptual level, and then we will add discussion of what is happening at the computational and mathematical levels. Eventually, you will be able to move more easily among the various levels when thinking about a given AI topic.
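As a small illustration of the triplet (a sketch of my own for this purpose, not an example taken from the sources cited above), here is one conceptual idea, searching for a route through a graph of nodes and edges, written out at the other two levels. Mathematically, we might describe the graph as G = (V, E), a set of vertices V and a set of edges E. Computationally, the same graph becomes a dictionary of neighbor lists, and the search becomes a loop over a queue. The example graph and the function name `find_path` are hypothetical, and breadth-first search is just one of many possible ways to search.

```python
from collections import deque

# Mathematical level: a graph G = (V, E), written here as a mapping from each
# vertex v in V to the list of vertices u such that (v, u) is an edge in E.
# This small graph is hypothetical, made up purely for illustration.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}


def find_path(graph, start, goal):
    """Conceptual level: search outward from `start`, one layer of
    neighbors at a time (breadth-first search), until `goal` is reached.

    Computational level: a queue of frontier nodes plus a dictionary that
    records how each node was first reached. Returns the path as a list
    of nodes, or None if `goal` cannot be reached.
    """
    frontier = deque([start])
    came_from = {start: None}  # also serves as the set of visited nodes
    while frontier:
        node = frontier.popleft()
        if node == goal:
            # Walk backward through came_from to reconstruct the path.
            path = []
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        for neighbor in graph[node]:
            if neighbor not in came_from:
                came_from[neighbor] = node
                frontier.append(neighbor)
    return None


print(find_path(GRAPH, "A", "F"))
```

Running it prints `['A', 'B', 'D', 'F']`, a path with the fewest possible edges. The point is less the algorithm itself than noticing the same idea in three forms: the mental picture of spreading outward through a graph, the queue and dictionary in the code, and the formal notation G = (V, E).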

2.7 Why official AI textbooks are a good choice (at least initially)

I am devoting an entire subsection to this topic because I believe it is quite important.

The following section includes links to other helpful and interesting resources for learning about AI. But first, a brief discussion of AI information in today’s Internet world.

There are a lot of AI resources out there on the Internet right now, including many “informal” ones like blog posts, Medium articles, GitHub projects, discussion forums, YouTube videos, and the list goes on. While it is exciting to see so many people enthusiastic about AI and eager to share their thoughts about it, the result has become something of an information maelstrom, awash with valuable, totally legit pieces of treasure mixed right in with random flotsam and jetsam and dead fish and stolen goods.

To be more explicit, while there is a lot of really great AI material out there, I have also seen an unfortunate proportion of AI material that is (a) flat-out wrong; (b) plagiarized; or (c) both.

The obvious suggestion would be to follow the usual sorts of best practices for evaluating the credibility of your sources. For example, is the author actually named; do they present their credentials; what is the website domain; how did you find the particular resource, etc.

I remember one post that almost made sense to me but didn’t quite…and then I searched more and found that it was essentially just a bunch of sentences that had been cribbed from a couple of other sources and strung together.

However, the current information maelstrom is just so maelstrom-y that I think these usual practices don’t actually weed out all of the bad information. For example, even as an AI expert, I sometimes have trouble figuring out if a blog post actually makes sense or not.

I remember coming across one site that was the main .com domain of a very reputable and globally known technology company, and I was shocked to find pages in their “AI knowledge base” that were just flat-out incorrect (e.g., claiming that a particular well-known AI algorithm does a particular thing, which in fact it doesn’t do at all). I guess the company wasn’t doing very good quality control on those pages, but it was really unfortunate, because the site definitely looked like a legitimate resource that one ought to be able to trust.

To take another example, I have seen objectively incorrect information on website domains that looked quite trustworthy to me.

In my own self-guided learning, I have found that it is extremely difficult for me to learn a new AI topic while also screening the information for trustworthiness. I think that part of the difficulty is cognitive overload, and part of the difficulty is that if I don’t already know a topic, then it is almost impossible for me to judge the correctness of what I am reading about it.

Therefore, my strong, strong recommendation for learning new AI things at any level is:

1. When first learning a particular AI topic, use a well-established textbook or other resource that you know is reliable.

2. Then, once you are solid on the basics of that topic, you will be MUCH better equipped to swim around in the maelstrom on your own and be able to sift out the treasures from the flotsam.

2.8 Other resources

With that said, here are some recommended resources for further reading, divided into three lists:

- Various articles and books that cover technical aspects of AI in general or of specific subfields.

- Thought-provoking readings on AI and cognitive science.

- Readings on AI and society (in the next chapter).

2.8.1 General AI resources

Melanie Mitchell’s very recent book Artificial Intelligence: A Guide for Thinking Humans gives a non-technical but content-rich overview of the broad field of AI, where it has been and where it is going, and descriptions of major approaches.

Under construction. See “What exactly IS AI?” in the excellent AI overview written by Selmer Bringsjord and Naveen Sundar Govindarajulu in the Stanford Encyclopedia of Philosophy (SEP). (I find SEP to be an indispensable resource for learning about big ideas in many areas of AI, cognitive science, math, and of course philosophy.)

For more in-depth information about many of the AI topics covered in this book, Artificial Intelligence: A Modern Approach by Stuart Russell and Peter Norvig (often called “AIMA” or “Russell & Norvig”) continues to be a standard in the field. I still regularly use my old, dark-green, second-edition copy as a desk reference. In addition to the coverage of technical topics, there is a really fabulous “Bibliographical and Historical Notes” section at the end of each chapter that lays out how the major developments in each area unfolded over time.

Under construction: Add Poole & Mackworth.

UC Berkeley graciously shares many materials from their undergraduate AI course CS 188, including lecture slides, homework problems, and the widely used Pacman AI Projects. Their treatment of topics tends to follow the Russell & Norvig textbook pretty closely.

For more on machine learning, Tom Mitchell’s Machine Learning textbook gives an excellent and digestible overview of major approaches and also theoretical angles. (Do not be put off by the 1997 publication date! The fundamentals abide.)

An Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, and Rob Tibshirani.

For more on neural networks, I recommend Michael Nielsen’s Neural Networks and Deep Learning as a superb introduction, and Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville for more advanced topics.

For more on reinforcement learning, Richard Sutton and Andrew Barto’s Reinforcement Learning: An Introduction is another standard in the field.

2.8.2 Thought-provoking readings on AI and cognitive science

These are some of my favorite readings, ranging from the casual to the technical, that provide all kinds of food for thought for AI people to chew on.

Some are AI-centric, and others are more about human or animal cognition. Many of the latter make for VERY interesting thought experiments, if we consider whatever example of intelligence is being presented and then ask ourselves, “How might this kind of intelligence work, from a computational perspective?” And its corollary, “How could we build an AI system that would ‘think’ in this way?”

(Why read these seemingly random books? AI is a combination of learning technical skills and thinking about big ideas. It is no accident that many of AI’s major advances came from cognitive scientists. Under construction: list them.)

Readings that are more AI-ish

- Mind Design II, edited by John Haugeland. Reading this book was one of the most influential intellectual journeys I ever took. It takes you from Turing’s classic paper “Computing Machinery and Intelligence,” through key ideas from thinkers like Newell & Simon, Dennett, Minsky, and Searle (the famous Chinese room argument), on to Rumelhart’s early visions of neural networks, Clark’s musings on what symbols really are, Brooks’ surprisingly effective yet simple robot architectures, and more.

Readings about cognitive science

The Life of Cognitive Science.

Thinking in Pictures: My Life with Autism.

Born on a Blue Day

Oliver Sacks

Descartes’ Error

Readings about science and scientists

Feynman

Turing biography

Ramanujan

A Beautiful Mind

Creating Scientific Concepts

Readings about animal cognition

The Parrot’s Lament

Alex and Me

Cultural Origins of Human Cognition

Readings about other human achievements

Wright brothers book, followed by the Gossamer Albatross

Design of Everyday Things

2.9 Exercises

Under construction.

Page built: 2022-12-06 using R version 4.1.1 (2021-08-10)